HarpIA Lab

The evaluation tools offered by the HarpIA Lab take JSON files as input and generate JSON files as output. The internal format of each file depends on the role it plays in the evaluation, as explained below.

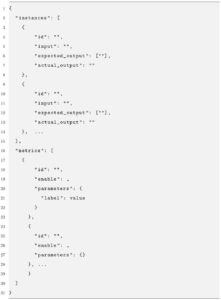

Input JSON file for submodule metrics (LLM)

This file specifies the list of instances and the list of metrics that will be processed by the HarpIA Lab evaluation tool. The list of instances contains the prompts submitted to the large language model (LLM) that is being evaluated, as well as the responses generated by the LLM and the responses expected by the researcher for each prompt. The list of metrics contains the specification of each metric (and its parameters) that the researcher wants to include in the evaluation result. It is important to note that the researcher can use the web interface of the HarpIA Lab module to build the list of metrics, but the list of instances must be built outside the HarpIA platform, using tools of the researcher’s preference.

The fields that specify an instance within the Input JSON file are:

- id: unique identifier of the test instance (chosen by the researcher);

- input: the prompt submitted to the LLM being evaluated;

- actual-output: the response generated by the LLM for the submitted prompt;

- expected-output: a list of possible responses for the prompt, according to the researcher’s judgment. These responses are used as a reference when calculating supervised metrics (see more below).
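Since the list of instances has to be built outside the platform, a small script can help. The following is a minimal sketch, assuming only the four field names listed above; the helper names `make_instance` and `check_instances` are hypothetical and are not part of HarpIA Lab.

```python
# Field names taken from the list above; everything else is illustrative.
INSTANCE_FIELDS = ("id", "input", "actual-output", "expected-output")


def make_instance(instance_id, prompt, actual_output, expected_outputs):
    """Build one test instance as a plain dictionary."""
    return {
        "id": instance_id,
        "input": prompt,
        "actual-output": actual_output,
        # expected-output is a list, even when there is a single reference.
        "expected-output": list(expected_outputs),
    }


def check_instances(instances):
    """Return a list of human-readable problems (empty if none were found)."""
    problems = []
    seen_ids = set()
    for pos, inst in enumerate(instances):
        for field in INSTANCE_FIELDS:
            if field not in inst:
                problems.append(f"instance {pos}: missing field '{field}'")
        if "id" in inst:
            if inst["id"] in seen_ids:
                problems.append(f"instance {pos}: duplicate id {inst['id']!r}")
            seen_ids.add(inst["id"])
        if "expected-output" in inst and not isinstance(inst["expected-output"], list):
            problems.append(f"instance {pos}: 'expected-output' must be a list")
    return problems


example = make_instance(
    "t1", "Bom dia.", "Good morning.", ["Good morning.", "Good day."]
)
print(check_instances([example]))
```

Running such a check before uploading the file catches duplicate identifiers and references that were supplied as a single string instead of a list.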

The fields that specify a metric within the Input JSON file are:

- id: metric identifier (metric name in HarpIA Lab Library);

- enable: flag (true or false) indicating whether the metric should be executed or not (occasionally, the researcher may want to execute the evaluation without a certain metric being computed and included in the results);

- parameters: values to be used as parameters of the metric.
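The three metric fields can be illustrated with a short sketch. It assumes that the file groups its two lists under top-level keys named `instances` and `metrics`; those key names, the metric identifiers, and the parameter name are all hypothetical placeholders, so the real names should be taken from a file produced by the HarpIA Lab web interface.

```python
import json


def make_metric(metric_id, parameters=None, enable=True):
    """Build one metric specification with the fields id, enable, parameters."""
    return {
        "id": metric_id,
        "enable": bool(enable),
        "parameters": dict(parameters or {}),
    }


def enabled_metric_ids(metrics):
    """List the identifiers of the metrics whose enable flag is true."""
    return [m["id"] for m in metrics if m.get("enable") is True]


def build_input_document(instances, metrics):
    # ASSUMPTION: the top-level key names below are placeholders.
    return {"instances": list(instances), "metrics": list(metrics)}


metrics = [
    # Hypothetical identifiers and parameter; not real HarpIA Lab Library names.
    make_metric("hypothetical-metric-a", {"hypothetical_param": 1}),
    make_metric("hypothetical-metric-b", enable=False),
]
document = build_input_document([], metrics)
text = json.dumps(document, ensure_ascii=False, indent=2)
print(enabled_metric_ids(metrics))
```

Setting `enable` to false keeps the metric and its parameters in the file, so it can be switched back on later without being specified again.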

The following figure shows an example input JSON file for evaluating an LLM fine-tuned to perform a translation task:

In the example, the test instance (input) is a sentence in Portuguese submitted to an LLM instructed to translate it into English. The expected response (expected-output) enables the execution of supervised metrics. Metrics of this kind need a reference sentence (a gold standard) that is compared with the response generated by the language model being evaluated. More than one reference sentence can be provided; in this case, the metric calculation takes all reference sentences into account and returns the best result obtained (the one most favorable to the model under evaluation). The response generated by the model appears in the last field (actual-output).
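The idea of keeping the best result across several references can be sketched as follows. This is only an illustration: `unigram_f1` is a toy stand-in metric chosen for brevity, not one of the HarpIA Lab metrics, and real metrics such as BLEU may combine multiple references in their own way.

```python
from collections import Counter


def unigram_f1(candidate, reference):
    """Toy supervised metric: F1 over lowercased, whitespace-split tokens."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    # Multiset intersection counts each shared token at most as often
    # as it appears in both sentences.
    overlap = sum((Counter(cand) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def best_score(candidate, references, metric=unigram_f1):
    """Score the candidate against every reference and keep the highest value."""
    return max((metric(candidate, ref) for ref in references), default=0.0)


print(best_score("good morning", ["good day", "good morning"]))
```

With two references, the candidate is compared with each one and the higher of the two scores is reported, which is the behavior most favorable to the model.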

The input file in the example illustrates the selection of two metrics, both of which are enabled. The first metric is an implementation of the BLEU metric that uses the NLTK package. This metric requires the specification of three parameters. The second metric is an implementation of the BERTScore metric that uses Hugging Face’s Evaluate package. Note that this metric requires the specification of ten distinct parameters.

Output JSON file generated by the submodule metrics (LLM)

The JSON file generated as output has one JSON object for each of the metrics selected by the researcher in the input JSON file. Each object contains the metric identification and the parameters, taken from the input JSON file, that determined how the metric was applied. The aggregated results appear under the “score” key. Note that, in the example, the content returned under the “score” key varies from metric to metric. Finally, note that the value of the “elapsed time” field represents the time taken to compute the metric over all instances specified in the input JSON file.

Log file generated by the submodule metrics (LLM)

The log file generated as output contains one JSON object per line, as illustrated in the figure. Each object represents the application of one of the metrics selected by the researcher to a specific instance, identified by the value of the “instance_id” field. The execution parameters of the metric, which were specified in the input JSON file, are reproduced here. Also, note that the “result” key contains the values returned by the metric in a format that is similar to the content of the “score” key that appears in the output JSON file. Finally, it is worth mentioning that not all metrics create log records at the instance level.
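Because each line of the log holds one JSON object, the file can be read line by line. The sketch below groups records by the “instance_id” field; it relies only on the “instance_id” and “result” keys mentioned above, and the sample records and their values are invented for illustration.

```python
import json
from collections import defaultdict


def group_log_by_instance(lines):
    """Parse one JSON object per line and group the records by instance_id."""
    grouped = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        record = json.loads(line)
        grouped[record["instance_id"]].append(record)
    return dict(grouped)


# Invented sample records; a real log carries further fields,
# such as the execution parameters of the metric.
sample_lines = [
    '{"instance_id": "t1", "result": 0.5}',
    '{"instance_id": "t1", "result": 0.7}',
    '{"instance_id": "t2", "result": 1.0}',
]
for instance_id, records in group_log_by_instance(sample_lines).items():
    print(instance_id, [r["result"] for r in records])
```

For a real log, an open file object can be passed in place of `sample_lines`, since iterating over a text file also yields one line at a time.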