Reactive Music Project (Projeto Música Reativa)

Introduction

We have been working on an alternative concept for designing music processing software, inspired by how interactions occur in chemical reactions. In a musical reactor, one musical substance reacts with another to produce a third, which inherits properties and content from the original sound materials, along with some residuals. This novel concept aims to accommodate distinct types of interactive behavior, for example, the capacity of a sound to react both to other sounds (as musical reagents) and to user commands during a performance.

Since the project began in 2013, we have proposed, implemented and tested a series of design principles, ways to control musical reactions, and ways to map chemical concepts onto music processing. These include, for example, a means of deriving a measure of one sound's musical reactivity to another, and a musical stoichiometry that establishes a musical balance between the reagents and their products. In this spirit, one cannot forget Lavoisier's lesson: the musical components participating in a musical reaction are not destroyed but rearranged, that is, transformed into other products.
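
The Reactor's actual reaction equation is not given on this page. As a purely hypothetical illustration of the stoichiometry idea, the sketch below balances two reagent signals so that each contributes the same RMS level to the product, and keeps whatever does not go into the product as a residual. In Lavoisier's spirit, nothing is destroyed: product plus residual always reproduces the plain sum of the reagents. The equal-RMS rule and the names `balance_coefficients` and `react` are assumptions made for this example.

```python
import math

def rms(signal):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))

def balance_coefficients(reagent_a, reagent_b):
    """Hypothetical balance: weights with a + b = 1 chosen so that
    a * rms(A) == b * rms(B), i.e. equal RMS contribution to the product."""
    ra, rb = rms(reagent_a), rms(reagent_b)
    if ra + rb == 0.0:
        return 0.5, 0.5  # two silent reagents: split evenly
    # Solving a*ra = b*rb with a + b = 1 gives a = rb/(ra+rb), b = ra/(ra+rb).
    return rb / (ra + rb), ra / (ra + rb)

def react(reagent_a, reagent_b):
    """Return (product, residual); product + residual == A + B sample by sample."""
    a, b = balance_coefficients(reagent_a, reagent_b)
    n = min(len(reagent_a), len(reagent_b))
    product = [a * reagent_a[i] + b * reagent_b[i] for i in range(n)]
    # Nothing is destroyed: what is not in the product is kept as residual.
    residual = [reagent_a[i] + reagent_b[i] - product[i] for i in range(n)]
    return product, residual

if __name__ == "__main__":
    a, b = balance_coefficients([1.0, -1.0] * 4, [0.5, -0.5] * 4)
    print(round(a, 3), round(b, 3))  # 0.333 0.667
```

The louder reagent receives the smaller weight, so neither sound dominates the product; any other balancing rule could be swapped into `balance_coefficients` without changing `react`.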

In each of its phases, the project has produced useful and intriguing computational tools [1], which are integrated into a library called “REACTIVE”. The first tool targeted a dynamic processor and mechanisms to control its operation; we ended up developing a kind of progressive harmonic filter capable of simultaneously processing several harmonics of a sound, which could in turn be defined either by the user or by a harmonic series extractor. For the second tool, we explored musical ways to “combine” or “transform” two sounds into another, i.e., the function behind the reaction. This phase led to the development of a time-pitch processor based on granular synthesis, the timepitch object. The third phase investigated catalysis for musical applications and concepts for musical reactors. In this phase we explored algorithms to balance reagents and formulated a musical reaction equation whose stoichiometric parameters are derived with the help of sound descriptors that probe the reagent sounds.

Software suite

While developing these musical processors, we also created a series of auxiliary tools as the need arose, proposing and testing them throughout the project. These tools mainly aim to give composers and artistic directors the means to command a musical performance in real time. First, one must address how sound parameters modify other sound parameters, bearing in mind that multiple mappings may coexist; we use a reaction curve to realize this mapping. Second, to monitor what is happening at any moment in a sound reaction chain, one needs simple procedures that provide awareness of active processes, such as activity alerts and visual cues. Usability principles are essential and strongly shape the design of the interfaces.
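
The page does not specify how a reaction curve is represented. One plausible reading, sketched here as an assumption, is a piecewise-linear breakpoint curve that maps the value of a source parameter (for example, the loudness of one sound) to a target parameter of another sound, with several such mappings active at once. The `ReactionCurve` class, the `apply_mappings` helper, and the choice to sum contributions that land on the same target are all hypothetical.

```python
from bisect import bisect_right

class ReactionCurve:
    """Hypothetical piecewise-linear breakpoint curve: source value -> target value."""

    def __init__(self, points):
        if len(points) < 2:
            raise ValueError("a reaction curve needs at least two breakpoints")
        self.points = sorted(points)           # (x, y) pairs ordered by x
        self.xs = [x for x, _ in self.points]

    def __call__(self, x):
        first, last = self.points[0], self.points[-1]
        if x <= first[0]:
            return first[1]                    # clamp below the first breakpoint
        if x >= last[0]:
            return last[1]                     # clamp above the last breakpoint
        i = bisect_right(self.xs, x)           # xs[i-1] <= x < xs[i]
        (x0, y0), (x1, y1) = self.points[i - 1], self.points[i]
        return y0 + (x - x0) / (x1 - x0) * (y1 - y0)

def apply_mappings(source_params, mappings):
    """Evaluate coexisting (source, target, curve) mappings.
    Contributions to the same target are summed (an assumption)."""
    targets = {}
    for source, target, curve in mappings:
        targets[target] = targets.get(target, 0.0) + curve(source_params[source])
    return targets

if __name__ == "__main__":
    # Loudness of sound A drives both the filter Q and the pitch offset of sound B.
    mappings = [
        ("rms_a", "q_b", ReactionCurve([(0.0, 1.0), (1.0, 20.0)])),
        ("rms_a", "pitch_b", ReactionCurve([(0.0, 0.0), (0.5, 7.0), (1.0, 12.0)])),
    ]
    print(apply_mappings({"rms_a": 0.25}, mappings))  # {'q_b': 5.75, 'pitch_b': 3.5}
```

Clamping at the end breakpoints keeps a target parameter inside a safe range even if the source value overshoots during a performance.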

In 2021 we released the first experimental distribution on GitHub to share all these objects openly with the public. Both main and auxiliary objects are included, revised to their latest versions, each with a “help” patch that briefly documents its features and usage.

Plug-in development

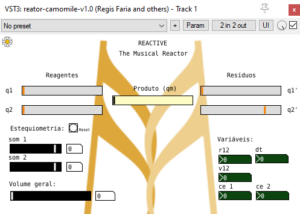

Since 2020 efforts are underway to develop a VST plugin for the Reactor patch. A Camomile VST3 [3] was compiled along with specific “externals” to behave as a host for the Reactor patch itself. The patch’s canvas turns into the GUI used in the plugin, as we can see in the image below:

Reactor VST3 prototype running on Reaper (v6.23) DAW

Main sound processors

Reactor

To simulate a reaction in which two sounds produce a new one, the Reactor maps stoichiometric concepts to a musical context. In the background, the program uses spectral and temporal sound descriptors, along with modeled equations, to extract relevant data from the sounds. Built primarily in Pure Data (Pd) [2], the patch lets users load sounds and offers controls to manage the proportions and volumes of both reagent and product sounds.
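
The descriptors the Reactor actually uses are not listed here. As an assumption, the sketch below computes three common ones on a single frame of samples: RMS level and zero-crossing rate (temporal) and spectral centroid (spectral). It uses a naive O(N²) DFT to stay dependency-free, whereas a real-time implementation would use an FFT. The function names are hypothetical.

```python
import math

def rms(frame):
    """Temporal descriptor: root-mean-square level."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

def zero_crossing_rate(frame):
    """Temporal descriptor: fraction of adjacent sample pairs that change sign."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0.0) != (b >= 0.0))
    return crossings / (len(frame) - 1)

def spectral_centroid(frame, sample_rate):
    """Spectral descriptor: magnitude-weighted mean frequency in Hz.
    Uses a naive DFT over bins 0..N/2 to avoid dependencies."""
    n = len(frame)
    weighted = total = 0.0
    for k in range(n // 2 + 1):
        re = sum(frame[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(frame[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        magnitude = math.hypot(re, im)
        weighted += (k * sample_rate / n) * magnitude
        total += magnitude
    return weighted / total if total > 0.0 else 0.0

def describe(frame, sample_rate):
    """Collect the descriptors for one reagent frame."""
    return {
        "rms": rms(frame),
        "zcr": zero_crossing_rate(frame),
        "centroid_hz": spectral_centroid(frame, sample_rate),
    }
```

Descriptors like these, computed for each reagent, could then feed a balancing rule or the parameters of a reaction equation.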

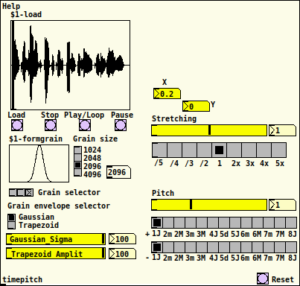

Timepitch

Timepitch changes the duration and/or the pitch of any sound object: one can stretch or shorten the duration of the signal and, independently, alter its pitch. This effect is often heard in political advertisements, where presentation time is short and speech is accelerated to fit the allotted time. The object allows frequency steps in musical intervals and time steps in powers of 2, as with note durations in music.
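
The text states only that timepitch is based on granular synthesis. The following minimal sketch, not the object's actual code, shows one standard way to decouple the two parameters: Hann-windowed grains are read from the input at a hop divided by the stretch factor and overlap-added to the output at a fixed hop, while each grain is internally resampled by the pitch ratio. Interval steps map to ratios of 2^(n/12) in equal temperament, and time steps are restricted to powers of 2. All function names and the default grain size are assumptions.

```python
import math

def semitones_to_ratio(semitones):
    """Equal-tempered frequency ratio for an interval in semitones."""
    return 2.0 ** (semitones / 12.0)

def power_of_two_stretch(exponent):
    """Duration factor restricted to powers of 2 (x1/2, x1, x2, x4, ...)."""
    return 2.0 ** exponent

def _read(signal, pos):
    """Linearly interpolated read at a fractional position; zero outside the signal."""
    i = math.floor(pos)
    if i < 0 or i >= len(signal):
        return 0.0
    if i == len(signal) - 1:
        return signal[i]
    frac = pos - i
    return signal[i] * (1.0 - frac) + signal[i + 1] * frac

def timepitch(signal, stretch=1.0, semitones=0.0, grain=256):
    """Granular overlap-add time stretch with independent pitch shift.
    grain should be even so that Hann windows at 50% overlap sum to 1."""
    hop = grain // 2
    ratio = semitones_to_ratio(semitones)
    # Periodic Hann window: overlap-adds to a constant at hop = grain / 2.
    window = [0.5 - 0.5 * math.cos(2.0 * math.pi * i / grain) for i in range(grain)]
    out_len = int(len(signal) * stretch)
    out = [0.0] * (out_len + grain)
    j = 0
    while j * hop < out_len:
        src = j * hop / stretch   # where the grain is read in the input
        dst = j * hop             # where the grain is written in the output
        for i in range(grain):
            # Reading i * ratio samples in transposes the grain by the pitch ratio.
            out[dst + i] += window[i] * _read(signal, src + i * ratio)
        j += 1
    return out[:out_len]
```

For example, `timepitch(samples, stretch=power_of_two_stretch(1), semitones=7)` would return a version twice as long, transposed up a perfect fifth. This naive scheme does not align grain phases, so it can produce audible artifacts on tonal material.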

Progressive filter

An implementation of band-pass filters that can be connected in parallel or in series. Their center frequency (fc) and quality factor (Q) can vary over time and can be tuned to specific harmonic partials of the sound. The progressive filter module combines seven different types of filters. It also includes a fundamental frequency (f0) parameter, an RMS level indicator (VU), a gain controller, and an eight-partial frequency selector.
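
As an illustration only, the sketch below builds a bank of second-order band-pass filters centered on selected harmonics k·f0 and runs it either in parallel (outputs summed) or in series (cascaded). The coefficients follow the band-pass (constant 0 dB peak gain) formulas of Robert Bristow-Johnson's Audio EQ Cookbook; the patch itself may use different filter designs, and only the band-pass type is shown, not all seven filter types of the module. The class and function names are hypothetical.

```python
import math

class BandPass:
    """Second-order band-pass biquad (Audio EQ Cookbook, constant 0 dB peak gain)."""

    def __init__(self, fc, q, fs):
        w0 = 2.0 * math.pi * fc / fs
        alpha = math.sin(w0) / (2.0 * q)
        a0 = 1.0 + alpha
        # Coefficients normalized by a0.
        self.b0, self.b1, self.b2 = alpha / a0, 0.0, -alpha / a0
        self.a1, self.a2 = -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0  # filter state

    def process(self, signal):
        """Filter a block of samples (direct form I), keeping state between calls."""
        out = []
        for x in signal:
            y = (self.b0 * x + self.b1 * self.x1 + self.b2 * self.x2
                 - self.a1 * self.y1 - self.a2 * self.y2)
            self.x2, self.x1 = self.x1, x
            self.y2, self.y1 = self.y1, y
            out.append(y)
        return out

def harmonic_bank(f0, partials, q, fs):
    """One band-pass per selected partial k, centered on k * f0 (below Nyquist)."""
    return [BandPass(k * f0, q, fs) for k in partials if 0 < k * f0 < fs / 2.0]

def run_parallel(bank, signal):
    """Parallel connection: every filter sees the input; outputs are summed."""
    outputs = [f.process(signal) for f in bank]
    return [sum(samples) for samples in zip(*outputs)]

def run_series(bank, signal):
    """Series connection: each filter processes the previous filter's output."""
    for f in bank:
        signal = f.process(signal)
    return signal
```

Making fc and Q time-varying, as the module allows, would amount to recomputing the coefficients per block while keeping each filter's state.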

Auxiliary objects

These patches play distinct roles within the Reactive distribution. Objects such as Patchbay and Nano are used to enhance live performance. Antideadlock, voluminho, voluminhost and Faixa are used as subpatches inside the structures of the main objects to simplify processes. Datatrack, progcurve and instr are useful for manipulating both data and signals.

| Name | Description |
|---|---|
| Datatrack | Data recorder and player. |
| Progcurve | Programmable curve for recording/playing data. |
| Instr | Instrument simulator. |
| Ponta | Probe for verifying signal points in patches. |
| Nano | Tool to expand the controllability of the Korg nanoKONTROL2 control surface. |
| Antideadlock | Anti-deadlock for sliders. |
| voluminho | Mono volume slider with on/off button. |
| voluminhost | Stereo volume slider with on/off button. |
| Faixa | Range converter. |
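
Faixa is described only as a range converter. A minimal sketch of what such a converter might do, assuming a linear mapping with optional clamping, is shown below; for instance, it could scale the 0 to 127 MIDI control-change values sent by a controller such as the nanoKONTROL2 to a gain or frequency range. The function name `faixa` and its parameters are hypothetical, not the patch's actual interface.

```python
def faixa(value, in_min, in_max, out_min, out_max, clamp=True):
    """Hypothetical linear range converter: maps value from
    [in_min, in_max] to [out_min, out_max], optionally clamping."""
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    t = (value - in_min) / (in_max - in_min)  # position within the input range
    if clamp:
        t = min(max(t, 0.0), 1.0)             # keep the result inside the output range
    return out_min + t * (out_max - out_min)

if __name__ == "__main__":
    print(faixa(127, 0, 127, 20.0, 20000.0))  # 20000.0
    print(faixa(200, 0, 127, 0.0, 1.0))       # 1.0 (clamped)
```

Swapping `out_min` and `out_max` inverts the direction of a slider, which is often handy when mapping physical controls.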

For more information, please visit the project page on GitHub or email us. The project welcomes new collaborators, so please get in touch if you would like to join the team. If you are a student, note that the project regularly offers scholarships for specific development work.

References and published articles

1. FARIA, R. R. A.; CUNHA JUNIOR, R. B.; AFONSO, E. S. Reactive music: designing interfaces and sound processors for real-time music processing. In: Proceedings of the 11th International Symposium on Computer Music Multidisciplinary Research (CMMR 2015), Plymouth, 2015. p. 626-633.

2. PUCKETTE, M. Pure Data. Official website and documentation.

3. GUILLOT, P. Camomile: Creating audio plugins with Pure Data. In: Linux Audio Conference, Berlin, Germany, June 2018.

4. FARIA, R. R. A.; LAVANDEIRA, G. O.; SULPICIO, E. C. M. G. Percussão múltipla sob interatividade reativa e projeção espacial. In: 14º Encontro Internacional de Música e Mídia, 2018, São Paulo. Anais, 2018.

5. CAROSIA, R. O.; FARIA, R. R. A. Música reativa – Controladores musicais para interação e reatividade. In: 23º Simpósio Internacional de Iniciação Científica e Tecnológica da USP, 2015, Ribeirão Preto. Anais do Simpósio Internacional de Iniciação Científica e Tecnológica da USP. São Paulo: Universidade de São Paulo, 2015. v. 23.

6. AFONSO, E. S.; CUNHA JUNIOR, R. B.; FARIA, R. R. A. Sound processors for live performance. In: 14º SBCM – Simpósio Brasileiro de Computação Musical, 2013, Brasília. Anais do 14º SBCM – Simpósio Brasileiro de Computação Musical. Porto Alegre: Sociedade Brasileira de Computação – SBC, 2013. p. 197-200.

7. CUNHA JUNIOR, R. B.; AFONSO, E. S.; FARIA, R. R. A. Suite de software para música reativa. In: 11º Congresso de Engenharia de Áudio e 17ª Convenção AES Brasil EXPO 2013, 2013, São Paulo. Anais do 11º Congresso de Engenharia de Áudio da AES Brasil. Rio de Janeiro: Sociedade de Engenharia de Áudio, 2013. p. 6-10.